Google Deep Mind and Game AI

Earlier this year, Google engineers published results about a neural network that was able to play old Atari games. The twist, however, was that this AI hadn't been designed to play those particular games, or, more precisely, to play Atari games at all. Using reinforcement learning (a technique that is basically just trial and error at the start and becomes more sophisticated with every try), Deep Mind learned to play the games on its own.
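To make "trial and error that gets more sophisticated" a bit more concrete, here is a minimal sketch. It is not Deep Mind's method (that one trains a neural network on raw pixels); it is a toy slot-machine learner, and the function name, the payout probabilities and the exploration schedule are all made up for illustration.

```python
import random

def learn_by_trial_and_error(payout_probs, rounds=5000, seed=0):
    """Toy learner: pick a lever, observe a 0/1 reward, update an estimate.

    Hypothetical example, not Deep Mind's algorithm.
    """
    rng = random.Random(seed)
    estimates = [0.0] * len(payout_probs)  # current guess of each lever's value
    counts = [0] * len(payout_probs)       # how often each lever was tried

    for t in range(rounds):
        # Explore almost always at first, then less and less.
        epsilon = 1.0 / (1 + t / 100)
        if rng.random() < epsilon:
            action = rng.randrange(len(payout_probs))  # pure trial and error
        else:
            # Exploit: take the lever that looks best so far.
            action = max(range(len(estimates)), key=estimates.__getitem__)

        reward = 1.0 if rng.random() < payout_probs[action] else 0.0

        # Incremental average of the rewards seen for this lever.
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]

    return estimates, counts

estimates, counts = learn_by_trial_and_error([0.2, 0.5, 0.8])
print(estimates, counts)
```

The learner starts out guessing blindly and ends up pulling the best lever most of the time. Note that this only works because every single try is answered with a reward right away.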

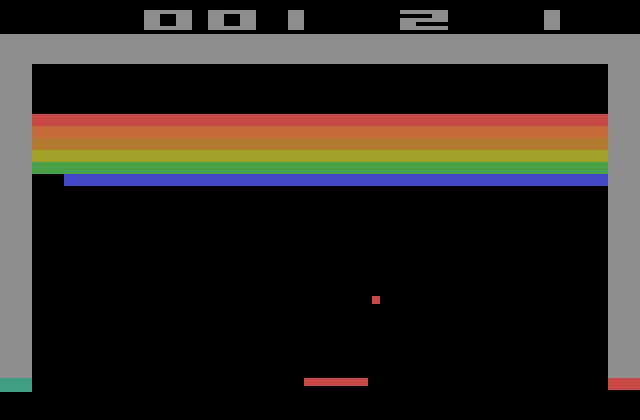

As input, it received the video stream produced by the Atari. As output, it was allowed to issue simple joystick commands (a steering direction plus the fire button) that would then be picked up by a virtual joystick, which sent the corresponding signals to the Atari (or so I guess; I couldn't find further details about this on the web).
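The setup described above boils down to a loop: frame in, joystick command out, score change back. Here is a rough sketch of that loop. Everything in it is a stand-in I made up: `ToyConsole` is a tiny fake game rather than an Atari emulator, `ACTIONS` is a simplified command set, and the random policy takes the place of the neural network.

```python
import random

# Hypothetical, simplified command set: stick directions plus a fire button.
ACTIONS = ["noop", "left", "right", "up", "down", "fire"]

class ToyConsole:
    """Stand-in for the console: catch a falling ball with a paddle."""
    WIDTH, HEIGHT = 5, 4

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.reset()

    def reset(self):
        self.paddle = self.WIDTH // 2
        self.ball_x = self.rng.randrange(self.WIDTH)
        self.ball_y = 0
        return self.render()

    def render(self):
        """Return the 'video frame': a grid of pixels, 1 = lit, 0 = dark."""
        frame = [[0] * self.WIDTH for _ in range(self.HEIGHT)]
        frame[self.ball_y][self.ball_x] = 1
        frame[self.HEIGHT - 1][self.paddle] = 1
        return frame

    def step(self, action):
        """Apply one joystick command; return (frame, reward, done)."""
        if action == "left":
            self.paddle = max(0, self.paddle - 1)
        elif action == "right":
            self.paddle = min(self.WIDTH - 1, self.paddle + 1)
        self.ball_y += 1
        done = self.ball_y == self.HEIGHT - 1
        reward = 1 if done and self.ball_x == self.paddle else 0
        return self.render(), reward, done

def random_policy(frame, rng=random.Random(1)):
    # A real agent would map pixels to an action; this one just guesses.
    return rng.choice(ACTIONS)

def run_episode(env, policy):
    frame = env.reset()
    total, done = 0, False
    while not done:
        action = policy(frame)  # the agent only ever sees pixels
        frame, reward, done = env.step(action)
        total += reward
    return total

print(run_episode(ToyConsole(seed=0), random_policy))
```

The point of the sketch is the interface: the policy never gets told where the ball or the paddle is, it only gets the grid of pixels and, eventually, a score.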

Google published a list of the games that Deep Mind tried to master, together with information on how well it did on each of them. The results range from '20 times as good as the best human' to 'totally shitty, as bad as a blind monkey'. While the former have been widely picked up and hyped by the media, the latter seem to be widely ignored. And while I don't doubt the achievement itself (they made a generic AI that learns tasks on its own, being fed nothing but a video stream), I don't like the way people focus on the numbers mentioned above (they made a generic AI that is 20 times as good as the best human...). An AI that is 20 times as good as the best human at Breakout does not have to be smart, as mastering Breakout obviously depends on reaction and precision much more than it depends on brains.

On the other hand, if people focused more on the games where Deep Mind failed, they might learn much more about the restrictions that reinforcement learning suffers from. And if they did, there wouldn't be so many discussions in gaming forums about whether 'we will soon have super smart cops/criminals/pedestrians in GTA' (which is what made me write this post in the first place). The answer to these discussions is plainly: No. We won't have super smart game AI of any relevance that is driven by a neural network (or a neural Turing machine, i.e. a neural network that has a memory). One reason for this is the fact that reinforcement learning can only be applied successfully when action and result are closely related in time.

In other games that are more complex, and yet nowhere near as complex as today's blockbusters (e.g. Montezuma's Revenge), the actions (moving the joystick) and rewards (getting points) are much more loosely connected. Here the AI scores 0% relative to a human player. The reason for this disastrous result is that trying random actions with a joystick won't earn you a single point in Montezuma's Revenge. So without ever being rewarded, the AI can't learn what's wrong and what's right. It is worth noting that this will be the same for every game that involves non-trivial boundaries, walls, or even a maze to maneuver through. Wait, you'll say, Deep Mind doesn't suck at Pac-Man; according to the list it reaches 13% (of human skill level). Well, Pac-Man's streets are paved with score, you could say. Moving through the maze means collecting points - at least until you have collected them all. And anything more complex, even just finding a path to a section of the Pac-Man maze where there are still points left to collect, is completely out of scope for Deep Mind so far. And that's why it won't stand a chance against traditional AIs (which are presented with a high-level abstraction of their surroundings, such as a navigation mesh) for a couple of decades.
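The difference between 'streets paved with score' and a game that only pays out after a long sequence of correct moves can be shown with a toy experiment. This is a sketch under my own assumptions, not a model of either game: the "level" is a corridor of cells, the player moves left or right at random, and the function names and numbers are made up.

```python
import random

def random_episode(length, steps, dense, rng):
    """Random walk on a corridor of `length` cells; return the score earned.

    dense=True:  every newly visited cell pays a point (score on every street).
    dense=False: only the far end of the corridor pays a point.
    """
    pos, visited, score = 0, {0}, 0
    for _ in range(steps):
        # Move one cell left or right, bumping into the walls at both ends.
        pos = max(0, min(length - 1, pos + rng.choice((-1, 1))))
        if dense:
            if pos not in visited:
                visited.add(pos)
                score += 1
        elif pos == length - 1:
            score += 1
            break
    return score

def fraction_rewarded(length, steps, dense, episodes=2000, seed=0):
    """Share of random episodes that earned at least one point."""
    rng = random.Random(seed)
    hits = sum(random_episode(length, steps, dense, rng) > 0
               for _ in range(episodes))
    return hits / episodes

print("dense :", fraction_rewarded(length=30, steps=50, dense=True))
print("sparse:", fraction_rewarded(length=30, steps=50, dense=False))
```

With these settings the dense variant gets rewarded in practically every episode, while the sparse one almost never sees a single point. A learner that starts out with random actions has something to improve on in the first case and nothing at all in the second.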